QUADCOPTER IN MARITIME RESCUE MISSION

The goal of this project is to configure and program a quad-copter that can locate shipwreck survivors and victims, starting from the GPS location of the last sighting. The quad-copter should be able to take off from a small boat, fly to the accident location, recognize human faces through computer vision methods, and send the coordinates of the people found to the operator. To do this, it applies a coverage algorithm that lets the robot inspect a large area of sea surface in the shortest possible time, increasing the chance of finding survivors.

First step: Coordinate system interpretation.

From GPS location to UTM location.

The last sighting of the accident is known to be close to 40º16'47.23" N, 3º49'01.78" W (GPS coordinates). We set off from a small boat located at 40º16'48.2" N, 3º49'03.5" W. This raises the first problem we have to deal with: the API we are going to use does not support GPS coordinates. To work with the positions of both the safety boat (we will need to return to it to recharge the battery when needed, or once the mission is finished) and the accident location, we apply a coordinate transformation.

Interpreting GPS Coordinate System

GPS coordinates describe a precise location on Earth's surface. They are expressed as two values:

- Latitude: Measures the angular distance North (N) or South (S) of the equator. Expressed in degrees (º), minutes (') and seconds ("). 0º is the equator, 90ºN is the North Pole and 90ºS is the South Pole. Positive latitude values belong to the northern hemisphere, while negative ones belong to the southern hemisphere.

- Longitude: Measures the angular distance East (E) or West (W) of the Prime Meridian in Greenwich (England). It is also expressed in degrees (º), minutes (') and seconds ("). Positive values lie east of the Prime Meridian, while negative ones lie west of it.

As the drone API has commands to move the drone a specific number of meters in x, y and z, we need to transform these GPS coordinates into a coordinate system that is also based on meters, which simplifies the logic of the program. The best option is to use UTM (Universal Transverse Mercator) coordinates:

Interpreting UTM Coordinate System

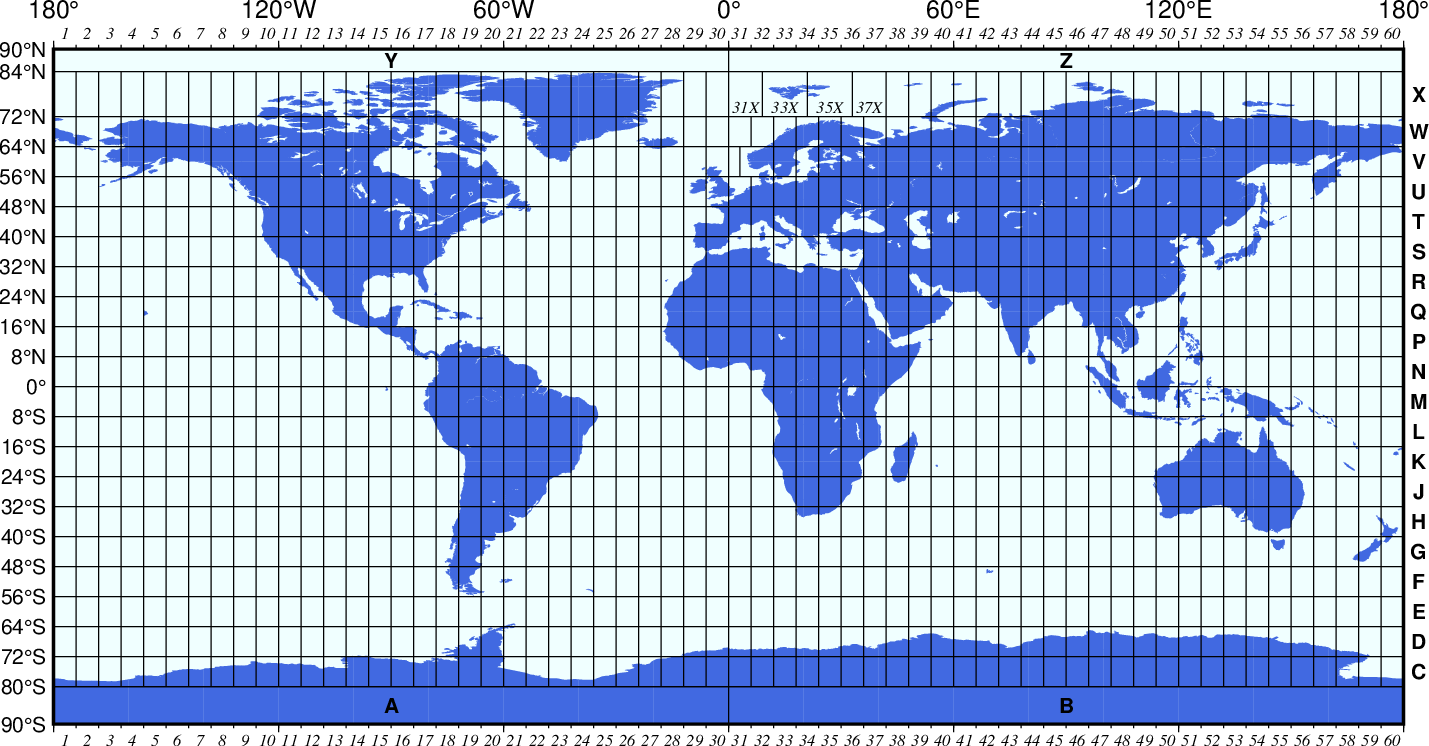

UTM is a coordinate system that divides the Earth into zones, each 6º of longitude wide. Each of these zones is labeled with a number ranging from 1 to 60, starting at the 180º meridian.

To locate a UTM coordinate, we first determine which UTM zone contains the location of interest. Once we know this, we express the coordinates in meters, again using two values:

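- Easting: Measures the distance (in meters) to the East (E) within the zone. It is referenced to the central meridian of the zone, which is assigned a false easting of 500,000 m so that all values stay positive.

- Northing: Measures the distance (in meters) to the North (N) from the equator (0º).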

We then transform both GPS positions to UTM coordinates.

Now we can compute the difference between the boat location and the accident location in UTM coordinates to know the distance between these points in meters. This difference will be used to command where the drone has to move to.
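As a rough illustration of this transformation, the sketch below converts both positions from degrees, minutes and seconds to decimal degrees and then to UTM with the standard series expansion on the WGS84 ellipsoid. The function names are hypothetical, the code only handles the northern hemisphere, and it is not the code used in the project; in practice a dedicated library would normally do this conversion.

```python
import math

# WGS84 ellipsoid and UTM constants
SEMI_MAJOR = 6378137.0
FLATTENING = 1 / 298.257223563
K0 = 0.9996                              # UTM scale factor on the central meridian
E2 = FLATTENING * (2 - FLATTENING)       # first eccentricity squared
EP2 = E2 / (1 - E2)                      # second eccentricity squared


def dms_to_decimal(degrees, minutes, seconds, hemisphere):
    """Convert degrees/minutes/seconds to signed decimal degrees."""
    value = degrees + minutes / 60 + seconds / 3600
    return -value if hemisphere in ("S", "W") else value


def latlon_to_utm(lat_deg, lon_deg):
    """Return (zone, easting, northing) for a northern-hemisphere point."""
    zone = int((lon_deg + 180) // 6) + 1
    lon0 = math.radians((zone - 1) * 6 - 180 + 3)   # central meridian of the zone
    phi = math.radians(lat_deg)
    lam = math.radians(lon_deg)

    n = SEMI_MAJOR / math.sqrt(1 - E2 * math.sin(phi) ** 2)
    t = math.tan(phi) ** 2
    c = EP2 * math.cos(phi) ** 2
    a = (lam - lon0) * math.cos(phi)
    # Meridian arc length from the equator to latitude phi
    m = SEMI_MAJOR * (
        (1 - E2 / 4 - 3 * E2**2 / 64 - 5 * E2**3 / 256) * phi
        - (3 * E2 / 8 + 3 * E2**2 / 32 + 45 * E2**3 / 1024) * math.sin(2 * phi)
        + (15 * E2**2 / 256 + 45 * E2**3 / 1024) * math.sin(4 * phi)
        - (35 * E2**3 / 3072) * math.sin(6 * phi)
    )
    easting = 500000.0 + K0 * n * (
        a
        + (1 - t + c) * a**3 / 6
        + (5 - 18 * t + t**2 + 72 * c - 58 * EP2) * a**5 / 120
    )
    northing = K0 * (
        m
        + n * math.tan(phi) * (
            a**2 / 2
            + (5 - t + 9 * c + 4 * c**2) * a**4 / 24
            + (61 - 58 * t + t**2 + 600 * c - 330 * EP2) * a**6 / 720
        )
    )
    return zone, easting, northing


boat = latlon_to_utm(dms_to_decimal(40, 16, 48.2, "N"),
                     dms_to_decimal(3, 49, 3.5, "W"))
accident = latlon_to_utm(dms_to_decimal(40, 16, 47.23, "N"),
                         dms_to_decimal(3, 49, 1.78, "W"))

# Offset (in meters) that the drone has to travel from the boat
delta_east = accident[1] - boat[1]
delta_north = accident[2] - boat[2]
print(f"zone {boat[0]}: move {delta_east:.1f} m east, {delta_north:.1f} m north")
```

Both points fall in the same UTM zone, so the two differences can be used directly as a displacement in meters. How east and north map onto the x and y axes of the drone API depends on the simulator frame and has to be checked separately.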

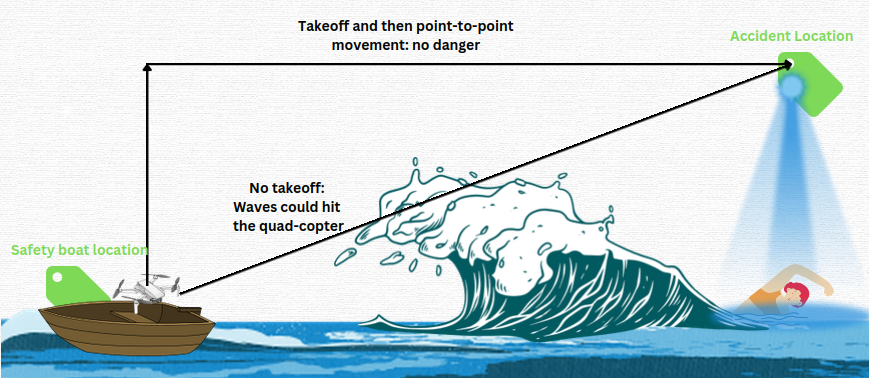

Before moving the drone to the accident location, we need to command the quad-copter to take off from the boat. This lets the drone start the point-to-point movement from an altitude that avoids hazards such as sea waves. Keep in mind that the chosen altitude should be neither too low (waves could hit the drone and ruin the whole rescue mission) nor too high (we will use computer vision algorithms to recognize human faces through the ventral camera of the drone, and if the flight altitude is too high, face recognition may lose precision):

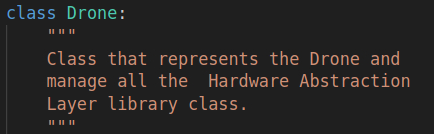

As a first version of the program, I created a Drone class that represents the drone itself and wraps all calls to the Hardware Abstraction Layer (HAL) library: getting the current robot position, orientation, velocities, landing state and camera images, as well as commanding takeoff, landing, position, velocity, and so on.

In each iteration of the main loop I update the robot information with drone.updateState(). This way, a single call on the Drone class refreshes all the information needed for the upcoming navigation tasks:

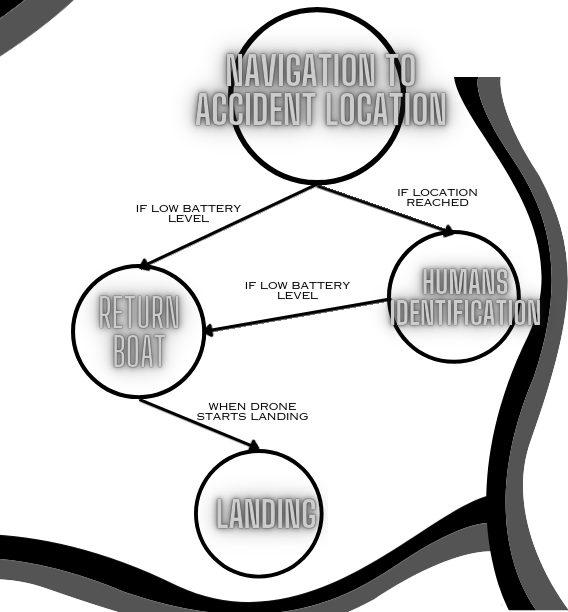
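The wrapper idea can be sketched as follows. This is only an outline under assumptions: the HAL method names used here (get_position, get_yaw, get_velocity, get_landed_state, get_ventral_image, set_cmd_pos) are placeholders to be mapped onto whatever the real HAL exposes, goTo is a hypothetical helper, and FakeHAL is a stand-in so the example runs on its own.

```python
class Drone:
    """Caches the latest HAL readings so the main loop reads a single object."""

    def __init__(self, hal):
        self.hal = hal
        self.position = (0.0, 0.0, 0.0)
        self.yaw = 0.0
        self.velocity = (0.0, 0.0, 0.0)
        self.landed_state = None
        self.ventral_image = None

    def updateState(self):
        # One call per loop iteration refreshes every cached reading
        self.position = tuple(self.hal.get_position())
        self.yaw = self.hal.get_yaw()
        self.velocity = tuple(self.hal.get_velocity())
        self.landed_state = self.hal.get_landed_state()
        self.ventral_image = self.hal.get_ventral_image()

    def goTo(self, x, y, z, yaw=0.0):
        # Hypothetical helper that forwards a position command to the HAL
        self.hal.set_cmd_pos(x, y, z, yaw)


class FakeHAL:
    """Stand-in for the real HAL, returning fixed values (assumption)."""

    def __init__(self):
        self.last_cmd = None

    def get_position(self):
        return [1.0, 2.0, 3.0]

    def get_yaw(self):
        return 0.5

    def get_velocity(self):
        return [0.0, 0.0, 0.0]

    def get_landed_state(self):
        return 2

    def get_ventral_image(self):
        return None

    def set_cmd_pos(self, x, y, z, yaw):
        self.last_cmd = (x, y, z, yaw)


drone = Drone(FakeHAL())
drone.updateState()
drone.goTo(40.0, -30.0, 3.0)
print(drone.position, drone.hal.last_cmd)
```

Keeping the HAL behind one object also makes it easy to swap in a fake like the one above when testing the navigation logic without the simulator.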

The program is implemented as a finite state machine (FSM), divided into the following states:

- Navigation to accident location: The drone takes off from the boat and approaches the accident location.

- Human identification: The main state of the program. Once the drone has reached the accident location, it starts a navigation algorithm and a human face identification method to locate possible victims around those coordinates.

- Return to boat: When the drone reaches a low battery level, it returns to the boat location.

- Landing: Once the robot is back at the boat location and is landing, it summarizes the information gathered during the mission.
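The states listed above can be sketched as a small transition function. The names RETURN_BOAT and LANDING come from the write-up; NAVIGATION, IDENTIFICATION and the three boolean inputs are hypothetical names chosen for this example, and the real program may drive its transitions differently.

```python
from enum import Enum, auto


class State(Enum):
    NAVIGATION = auto()       # flying from the boat to the accident location
    IDENTIFICATION = auto()   # spiral search plus face detection
    RETURN_BOAT = auto()      # flying back because the battery is low
    LANDING = auto()          # landing and printing the mission summary


def next_state(state, at_accident, battery_low, at_boat):
    """Return the state for the next loop iteration."""
    if state is State.NAVIGATION and at_accident:
        return State.IDENTIFICATION
    if state is State.IDENTIFICATION and battery_low:
        return State.RETURN_BOAT
    if state is State.RETURN_BOAT and at_boat:
        return State.LANDING
    return state  # no transition condition met


state = State.NAVIGATION
state = next_state(state, at_accident=True, battery_low=False, at_boat=False)
print(state)
```

Keeping the transition logic in one pure function makes each state change easy to check in isolation from the flight code.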

Second step: Covering algorithm to inspect sea surface

Once the drone is at the accident location, it is time to cover as much area as possible in the minimum time possible, to increase the chance of finding survivors. This depends mainly on two things:

The path followed by the quad-copter. We can do systematic sweeps back and forth as in BSA (the Backtracking Spiral Algorithm), following the priority order [DIR1, -DIR1, PERPENDICULAR_TO_DIR1, -PERPENDICULAR_TO_DIR1], or we can do spiral movements from a given center point.

The velocities commanded to the quad-copter. High velocities could degrade the human identification algorithm because of motion blur in the captured image, while low velocities would lead to inefficient movement and less chance of finding survivors.

Regarding the path, the main problem with BSA or similar algorithms is that there are no obstacles to tell us when to change direction, so we would have to pick an arbitrary maximum distance at which to turn. For this reason, we will use a spiral path.

Regarding the velocities, we will use a set of velocities along the X and Y axes that matches the motion of the drone and still lets us move considerably fast.

TYPES OF SPIRAL

There is a large variety of spirals that we could use for the drone navigation in the XY plane. The candidates are:

- GEOMETRIC SPIRAL: Increases or decreases the spiral radius at a constant rate. This rate could be managed by time or by distance.

- LOGARITHMIC SPIRAL: Spiral whose radius grows exponentially with the angle, so the spacing between successive turns widens as it moves away from the center.

- ARCHIMEDEAN SPIRAL: Spiral whose radius grows linearly with the angle, so successive turns are evenly spaced as it moves away from the central point.

We will discard the logarithmic spiral because the gap between turns keeps widening as we move away from the center of the spiral (the initial point). Survivors who are some distance from the center (that is, from the last sighting point of the accident) could fall between two turns and go undetected.
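To make the argument about turn spacing concrete, the following sketch compares the radial gap between consecutive turns of an Archimedean spiral (r = a + b·θ) and a logarithmic spiral (r = a·e^(k·θ)). The parameter values are arbitrary and only serve the comparison.

```python
import math


def archimedean_radius(theta, a=0.0, b=1.0):
    """Radius grows linearly with the angle."""
    return a + b * theta


def logarithmic_radius(theta, a=1.0, k=0.2):
    """Radius grows exponentially with the angle."""
    return a * math.exp(k * theta)


def turn_gaps(radius_fn, turns):
    """Radial gap between consecutive turns, measured along one fixed direction."""
    radii = [radius_fn(2 * math.pi * n) for n in range(turns + 1)]
    return [outer - inner for inner, outer in zip(radii, radii[1:])]


arch_gaps = turn_gaps(archimedean_radius, 4)
log_gaps = turn_gaps(logarithmic_radius, 4)
print("Archimedean gaps:", [round(g, 2) for g in arch_gaps])
print("Logarithmic gaps:", [round(g, 2) for g in log_gaps])
```

The Archimedean gaps stay constant while the logarithmic ones grow with every turn, which is why a camera with a fixed ground footprint would eventually leave uncovered bands on a logarithmic path.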

For a first version of the program, we start with a simple geometric spiral whose growth is controlled by time at a constant rate. Once the drone has reached the commanded accident position, it starts a spiral movement with SPIRAL_INIT_VEL in both x and y, which increases by a constant SPIRAL_VEL_INCREASE every SPIRAL_TIME seconds. For the yaw rate of the drone, we use a constant SPIRAL_ANG_VEL rad/s.

Here is a demonstration of this movement (TIP: Play the video at x2 speed for a better view of the spiral path):
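A minimal sketch of this time-stepped schedule is shown below. The constant names come from the text, but their values are made up for illustration, and spiral_command and approx_radius are hypothetical helpers. With a constant yaw rate, a constant body-frame speed traces a circle of radius speed / yaw rate, so raising the speed in steps widens the path over time.

```python
import math

# Illustrative values only; the project's real constants are not given here
SPIRAL_INIT_VEL = 0.5       # m/s, initial velocity on both x and y
SPIRAL_VEL_INCREASE = 0.1   # m/s added at every step
SPIRAL_TIME = 10.0          # seconds between velocity increases
SPIRAL_ANG_VEL = 0.3        # rad/s, constant yaw rate


def spiral_command(elapsed_s):
    """Return (vx, vy, yaw_rate) to command after elapsed_s seconds of spiral."""
    steps = int(elapsed_s // SPIRAL_TIME)
    v = SPIRAL_INIT_VEL + steps * SPIRAL_VEL_INCREASE
    return v, v, SPIRAL_ANG_VEL


def approx_radius(elapsed_s):
    """Approximate turning radius: planar speed divided by yaw rate."""
    vx, vy, yaw_rate = spiral_command(elapsed_s)
    return math.hypot(vx, vy) / yaw_rate


for t in (0, 10, 25, 60):
    print(t, spiral_command(t), round(approx_radius(t), 2))
```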

Third step: Human faces recognition. Haar Cascades

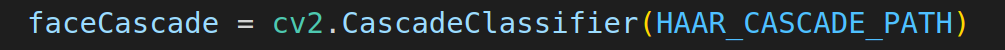

Now it is time to run the human face recognition method using OpenCV's Haar Cascades. This method needs an XML file that can be found in the RoboticsAcademy GitHub repository, so we just pass its path to the Cascade Classifier to load the necessary data:

We do all the detection work in a function called detectFaces(), which receives the drone object and the list of faces already detected. Notice that we want to detect each person only once, so we need to ignore detections of a human that was already detected earlier.

As a first approximation, I took the XY position of the robot as the estimated position of the detected human. The error of this estimate depends on the flight altitude (the higher the altitude, the noisier the coordinate estimate) and on where in the ventral image the human is detected (if the detected face is far from the center of the image, the estimate is worse).

Although Haar Cascade is a useful and simple method, it comes with two big problems:
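One simple way to ignore repeated detections is to compare each new estimated position with the ones already stored and keep it only if it is far enough from all of them. The sketch below assumes positions are (x, y) tuples in meters; MIN_FACE_SEPARATION and both function names are hypothetical and not taken from the project.

```python
import math

MIN_FACE_SEPARATION = 3.0   # meters; hypothetical threshold, to be tuned


def is_new_face(candidate_xy, known_faces, min_separation=MIN_FACE_SEPARATION):
    """True if the candidate is at least min_separation away from every known face."""
    return all(math.dist(candidate_xy, face) >= min_separation
               for face in known_faces)


def register_face(candidate_xy, known_faces):
    """Append the candidate if it is new; return whether it was added."""
    if is_new_face(candidate_xy, known_faces):
        known_faces.append(candidate_xy)
        return True
    return False


faces = []
register_face((10.0, 5.0), faces)    # first sighting, stored
register_face((10.8, 5.4), faces)    # same person seen again, ignored
register_face((18.0, -2.0), faces)   # far enough away, stored
print(faces)
```

The threshold has to be larger than the position error of the estimate, otherwise one person seen from two frames would be counted twice.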

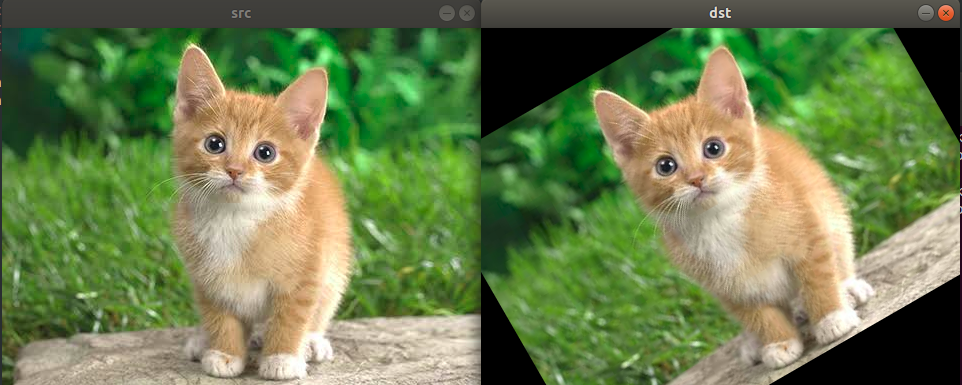

PROBLEM 1: HAAR CASCADE ONLY DETECTS VERTICAL HUMAN FACES

The drone will be flying a spiral during face recognition, and the people to be found can be oriented in any direction. To solve this problem, we rotate the ventral image N_IMAGE_ROTATIONS times so that any face captured in it can be detected. The angle of the i-th rotation is i*(360 / N_IMAGE_ROTATIONS) degrees.
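The set of rotation angles can be generated as in the sketch below, taking the step between rotations as 360 / N_IMAGE_ROTATIONS degrees so that the rotations cover a full turn. The value 8 is only an example. The image rotation itself is typically done with cv2.getRotationMatrix2D and cv2.warpAffine, which are left out here to keep the example dependency-free.

```python
N_IMAGE_ROTATIONS = 8   # illustrative value


def rotation_angles(n_rotations=N_IMAGE_ROTATIONS):
    """Evenly spaced angles in degrees covering one full turn, starting at 0."""
    step = 360 / n_rotations
    return [i * step for i in range(n_rotations)]


print(rotation_angles())
```

With 8 rotations the step is 45 degrees, so a face in any orientation is at most 22.5 degrees away from upright in one of the rotated images.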

PROBLEM 2: HIGH COMPUTATION WORK

In each iteration of the main while True loop we take the updated ventral image and check N_IMAGE_ROTATIONS times whether it contains a human face. This causes an extremely high computational load that needs to be reduced, so that it does not significantly cut the autonomy of the drone and the drone can fly for as long as possible during the rescue mission.

To solve this problem I worked from the following hypothesis: when the spiral movement and face detection task starts, the drone is at the accident location. As this is a maritime rescue mission, most of the time the ventral camera captures nothing but sea surface (that is, an almost uniformly blue image), so we can run face detection only when the variance of the image pixels exceeds a minimum value:

- Variance of the image pixels: The statistical measure of the amount of variation or spread in the pixel values of the image. In other words, it quantifies how much the pixel values deviate from their mean (average) value. If an image is filled with pixels of nearly the same color (even with lighting changes across the image), the variance will be low.

Using OpenCV's cv2.meanStdDev(image), we obtain the mean and the standard deviation (σ) of an image. We compute the image variance as VAR = σ². From now on, we only run detection on captured images whose variance exceeds VARIANCE_IMAGE_THRESHOLD:
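The gating step can be sketched as below. To keep the example runnable without OpenCV, the function takes the per-channel standard deviations as plain numbers; with OpenCV they would come from cv2.meanStdDev(image), as noted in the comment. Averaging the channel variances into one value is an assumption of this sketch, and the threshold value is made up.

```python
VARIANCE_IMAGE_THRESHOLD = 200.0   # illustrative value, to be tuned on real images


def image_variance(channel_stddevs):
    """Mean of the per-channel variances, each computed as VAR = sigma ** 2."""
    sigmas = [float(s) for s in channel_stddevs]
    return sum(s * s for s in sigmas) / len(sigmas)


def should_detect(channel_stddevs, threshold=VARIANCE_IMAGE_THRESHOLD):
    """Run face detection only on frames that are not (almost) uniform."""
    return image_variance(channel_stddevs) > threshold


# With OpenCV, the input would be obtained roughly like this:
#   _, stddev = cv2.meanStdDev(image)
#   run_detection = should_detect(stddev.flatten())

print(should_detect([2.0, 3.0, 2.5]))      # plain sea surface, low spread
print(should_detect([20.0, 25.0, 30.0]))   # something else is in the frame
```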

After some trouble with the first approximation, which assumed that

Human Coordinates ≈ Drone Coordinates In Detection Moment

I decided to change the method. For better precision, I measured the difference in local coordinates between the center point of the ventral image and its corners, to know, at our FLIGHT_ALTITUDE, the real distance in meters between a face detected at the center of the image and one detected elsewhere in the image. With this information, I compute the estimated local position of the detected human taking into account:

- The position of the drone when the image was taken

- The position in pixels of the human face center in the image

- If the human was detected after some rotation, the rotation angle of the image.

- The yaw orientation of the drone

With all this information I compute the estimated local position of the detected human. This gives a bit more precision to distinguish one human from another.
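The four inputs listed above can be combined roughly as in the sketch below. Every convention in it is an assumption that must be checked against the simulator: the image is taken to have been rotated about its center with a positive counter-clockwise angle (as cv2.getRotationMatrix2D does), the top of the unrotated image is taken to point along the drone's forward axis, the right of the image to the drone's right, and footprint_m is the ground area in meters covered by the image at FLIGHT_ALTITUDE. All names are hypothetical.

```python
import math


def rotate(x, y, angle_rad):
    """Standard 2D rotation of (x, y) by angle_rad."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return c * x - s * y, s * x + c * y


def estimate_human_position(drone_xy, drone_yaw, face_px, image_size,
                            footprint_m, image_rotation_deg=0.0):
    """Estimate the local (x, y) of a face seen by the ventral camera."""
    width, height = image_size
    # Pixel offset of the face from the image center
    du = face_px[0] - width / 2
    dv = face_px[1] - height / 2
    # Undo the image rotation (inverse of the assumed OpenCV rotation matrix)
    du, dv = rotate(du, dv, math.radians(image_rotation_deg))
    # Pixels to meters on the sea surface at the flight altitude
    right_m = du * footprint_m[0] / width
    down_m = dv * footprint_m[1] / height
    # Assumed camera mounting: image up is forward, image right is drone right
    forward, left = -down_m, -right_m
    # Body frame to local frame using the drone yaw
    dx, dy = rotate(forward, left, drone_yaw)
    return drone_xy[0] + dx, drone_xy[1] + dy


# Face at the top-center of a 640x480 image covering 8 m x 6 m of sea
print(estimate_human_position((10.0, 5.0), 0.0, (320, 0), (640, 480), (8.0, 6.0)))
```

A face at the image center maps back to the drone position, which recovers the first approximation as a special case.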

Fourth step: Battery control of the drone

We are in a rescue mission where we do not know how many victims may be at the accident location, so we will not return to the safe boat after detecting a fixed number of people; instead, we return when the drone battery is too low.

To simulate the drone battery level, I take into account the typical battery life of current drones, the computational load (once the variance threshold is applied), and the constant velocities commanded during the spiral path.

I introduced in the program a list of constants related to the battery. The most important are:

- BATTERY_LVL_RETURN_BASE: Indicates, in % of battery, when the drone needs to return to the safe boat.

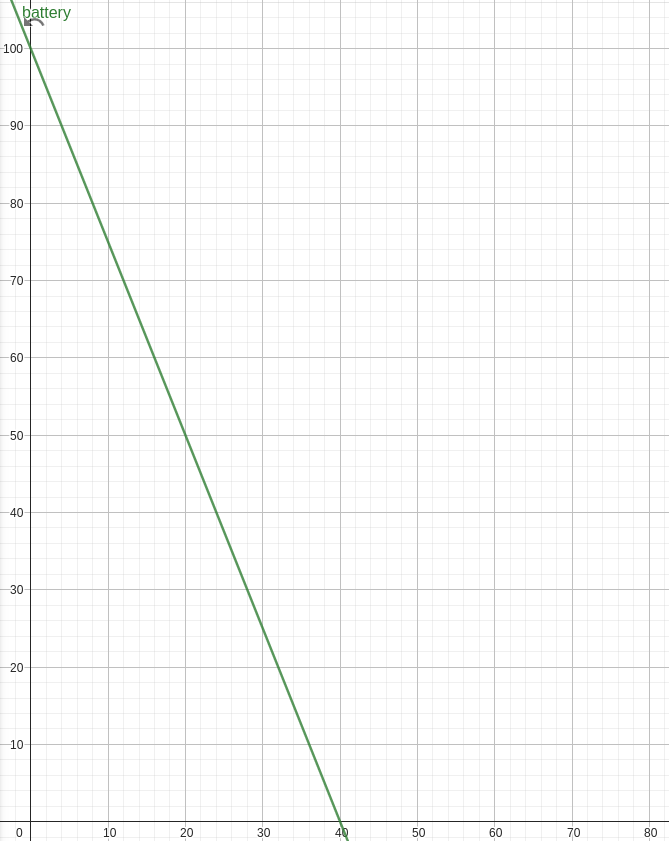

- BATTERY_DECREASE_RATE: Indicates the battery discharge rate, which follows the formula y = 100 - x*t, where y (output) is the % of battery remaining, t is the elapsed time in minutes, and x is the BATTERY_DECREASE_RATE in % per minute.

Here is an example of how the drone battery will discharge using a BATTERY_DECREASE_RATE=2.5:

With this rate value, the drone has an autonomy of 40 minutes (100 / 2.5). If we set, for example, BATTERY_LVL_RETURN_BASE=15 (%), we will have about 6 minutes of autonomy (15 / 2.5) left to return to base.
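The arithmetic behind these figures can be checked with a short sketch of the linear discharge model y = 100 - x*t, reading the rate as percent per minute, which is what the 40-minute autonomy implies. The constant names come from the text; the function names are hypothetical.

```python
BATTERY_DECREASE_RATE = 2.5      # % of battery lost per minute
BATTERY_LVL_RETURN_BASE = 15.0   # % at which the drone heads back to the boat


def battery_level(elapsed_min, rate=BATTERY_DECREASE_RATE):
    """Remaining battery in %, following y = 100 - x * t (never below 0)."""
    return max(0.0, 100.0 - rate * elapsed_min)


def must_return(elapsed_min):
    """True once the level has dropped to the return threshold."""
    return battery_level(elapsed_min) <= BATTERY_LVL_RETURN_BASE


total_autonomy = 100.0 / BATTERY_DECREASE_RATE                          # 40 minutes
search_time = (100.0 - BATTERY_LVL_RETURN_BASE) / BATTERY_DECREASE_RATE  # 34 minutes
reserve_time = total_autonomy - search_time                              # 6 minutes
print(total_autonomy, search_time, reserve_time)
```

The reserve has to cover the flight from the farthest point of the spiral back to the boat, so the threshold should grow if the search radius does.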

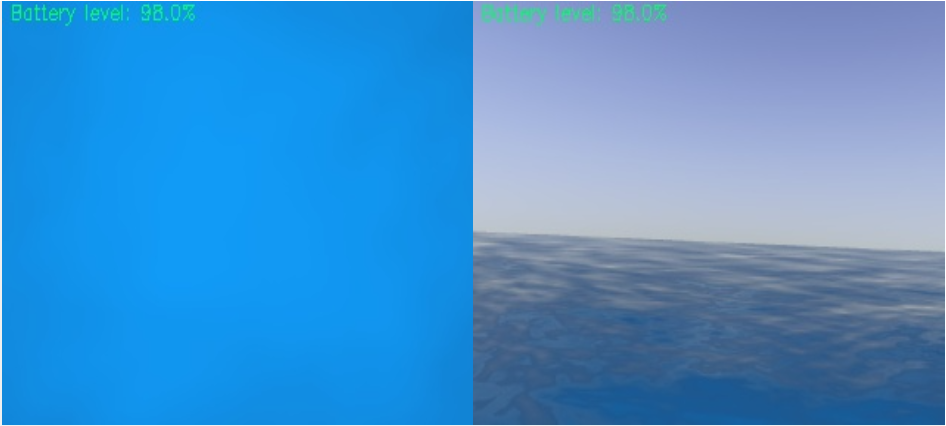

For easier interpretation and monitoring, I added the battery level to the OpenCV images displayed in the interface. This battery level is updated every time it has to decrease by 1% (BATTERY_UPDATE_RATE constant):

Once the BATTERY_LVL_RETURN_BASE percentage is reached, the program displays a message indicating that the drone is returning to base (and prints a timestamp in UTC), commands the drone to go to the safe boat location, and changes the FSM state to RETURN_BOAT.

During this state, we check whether the drone has reached the commanded safe boat location so it can land. Once landed, we move to the final state of the FSM (LANDING state). Here we print a summary of the number of humans detected, with their related information (the UTC time when they were located and their estimated position in local coordinates), before finishing the program.

Here's an example of this implementation: the drone will return to base when battery is <=97%:

DEMONSTRATION VIDEO OF THE PROGRAM:

In the following video you can see a full demonstration of the working program (drone takeoff, approach to the accident location, navigation and human face recognition). Notice that it does not show the return functionality, because in this demonstration BATTERY_LVL_RETURN_BASE=15: